Open Future: Mapping the Open Territory

A comprehensive exploration of the future of AI and computing through the lens of open versus closed systems

Introduction

AI is changing the laws that once governed computing. We stand at a critical juncture where the choices made today will determine whether AI becomes a force for democratization or concentration. This document explores the evolution of computing, the risks of closed systems, and the promise of an open future.

As Andrej Karpathy put it, AI is "Software 2.0" - it isn't just an efficiency gain like previous revolutions. AI creates knowledge that we didn't have before and can navigate nearly inconceivable amounts of data and complexity. It will ask questions we didn't even know to ask, destroy existing industries, and create new ones.

The fundamental question we face is whether AI will follow the historical trend of falling costs and broadening access, or whether it will represent the first computing revolution that concentrates rather than democratizes access to technology.

Part 1: How We Got Here - The Evolution of Computing

The Historical Pattern of Computing Revolutions

Until recently, Bell's Law gave us an accurate framework for understanding computing revolutions, stating that each decade a new class of computing emerges, resulting in a fundamental shift in access. This pattern has been remarkably consistent throughout the history of computing.

The progression has been clear and transformative:

- 1950s: Mainframes - UNIVAC, integrated circuit (IC) technology

- 1960s: Minicomputers - 12-bit PDP-8, DRAM, IBM antitrust lawsuit

- 1970s: Personal Computers - Intel 4004, Minitel, Unix

- 1980s: Browser Era - World Wide Web, Linux, Mozilla

- 1990s: Mobile - iPhone, "Open Source" movement, Ethernet

- 2000s: Cloud - Android, Red Hat IPO, PCIe

- 2010s: AI - ChatGPT, DeepSeek, RISC-V, Red Hat acquisition by IBM

The Accessibility Revolution

These revolutions allowed us to make computers that were much more accessible, simultaneously driving performance up 10x while also driving cost down 10x. In 1981, a fully loaded IBM PC cost $4,500. Today, an iPhone, which is many millions of times faster, retails for $1,129. Through this process, we became exceptionally good at building very powerful computers with very small chips.
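The compounding implied by "10x faster and 10x cheaper" can be made concrete with a small sketch. It assumes, purely for illustration, that each computing generation delivers exactly those two factors, so performance per dollar improves 100x per generation. The function name and the idea of counting whole generations are assumptions of this sketch; the two prices are the figures quoted above, in nominal dollars.

```python
def price_performance_gain(generations: int,
                           performance_factor: float = 10.0,
                           cost_factor: float = 10.0) -> float:
    """Compounded performance-per-dollar gain after some number of generations.

    Illustrative assumption: every generation multiplies performance by
    `performance_factor` and divides cost by `cost_factor`.
    """
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return (performance_factor * cost_factor) ** generations


# Sticker prices quoted in the text, in nominal dollars (not inflation-adjusted).
IBM_PC_1981_USD = 4500
IPHONE_TODAY_USD = 1129

if __name__ == "__main__":
    for n in range(1, 4):
        gain = price_performance_gain(n)
        print(f"{n} generation(s): {gain:,.0f}x performance per dollar")
    ratio = IBM_PC_1981_USD / IPHONE_TODAY_USD
    print(f"nominal price ratio, IBM PC to iPhone: {ratio:.2f}x")
```

The nominal price gap is only about 4x; almost all of the gain shows up as performance rather than as a lower sticker price.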

Every shift created new leaders, sidelined old ones, and required adaptation. From a social perspective, these innovations gave many more people access to compute, democratizing technology and expanding opportunities.

The AI Exception: Breaking Bell's Law

However, something different is happening with artificial intelligence. Prices aren't dropping with the advent of AI. While the cost per math operation is going down, the actual cost of inference per token is still climbing, because models are getting larger (e.g., GPT-4.5), doing more work (e.g., "reasoning models"), and doing work that is more intensive (e.g., new image generation).
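One way to see how cheaper operations and costlier inference can coexist is to write the cost of an answer as a product: cost per operation, times operations per token, times tokens per answer. The sketch below uses entirely hypothetical numbers (they are not measurements of any real model) to show the first factor halving while the other two grow faster.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceScenario:
    """Hypothetical cost model; every field is an illustrative assumption."""
    cost_per_operation: float    # dollars per math operation
    operations_per_token: float  # grows as models get larger
    tokens_per_answer: float     # grows with "reasoning"-style outputs

    def cost_per_token(self) -> float:
        return self.cost_per_operation * self.operations_per_token

    def cost_per_answer(self) -> float:
        return self.cost_per_token() * self.tokens_per_answer


# Made-up baseline: a smaller model that answers directly.
baseline = InferenceScenario(
    cost_per_operation=1e-12,
    operations_per_token=1e9,
    tokens_per_answer=500,
)

# Made-up successor: operations cost half as much, but the model does
# 4x the work per token and emits 5x as many tokens per answer.
successor = InferenceScenario(
    cost_per_operation=0.5e-12,
    operations_per_token=4e9,
    tokens_per_answer=2500,
)

if __name__ == "__main__":
    for label, s in (("baseline", baseline), ("successor", successor)):
        print(f"{label}: ${s.cost_per_token():.4f} per token, "
              f"${s.cost_per_answer():.2f} per answer")
```

Under these assumed numbers the cost per operation falls by half, yet the cost per token doubles and the cost per answer rises tenfold.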

AI datacenters are orders of magnitude more powerful than previous generations, with spending rising by tens of billions of dollars year-over-year. Even if we eventually see some cost reductions, it will take time before AI becomes affordable, leaving all but a few behind in the AI revolution.

Why AI is Different

Why is this computer class more expensive? AI is extremely physically intensive, requiring more silicon, more energy, and more resources. From shifting the physics of compute at the transistor level to building out the global infrastructure of AI data centers, this revolution is pushing against the physical limitations of human industry.

This physical intensity creates a fundamental challenge: if Bell's Law breaks fully, AI will be the first computing revolution that doesn't increase access, but instead concentrates it.

Part 2: A Closed World - The Risks of Concentration

Historical Precedent: We've Been Here Before

This isn't the first time we've been presented with a choice between a closed or open future. In fact, we're living in a closed world today because of choices made for us 40+ years ago. Early minicomputer and PC culture was dominated by a hacker ethos defined by "access to computers... and the Hands-On Imperative."

By the late 90s and early 00s, Windows and Intel had come to dominate PC development, limiting innovation and hamstringing competitors and partners alike.

The Economics of Closed Systems

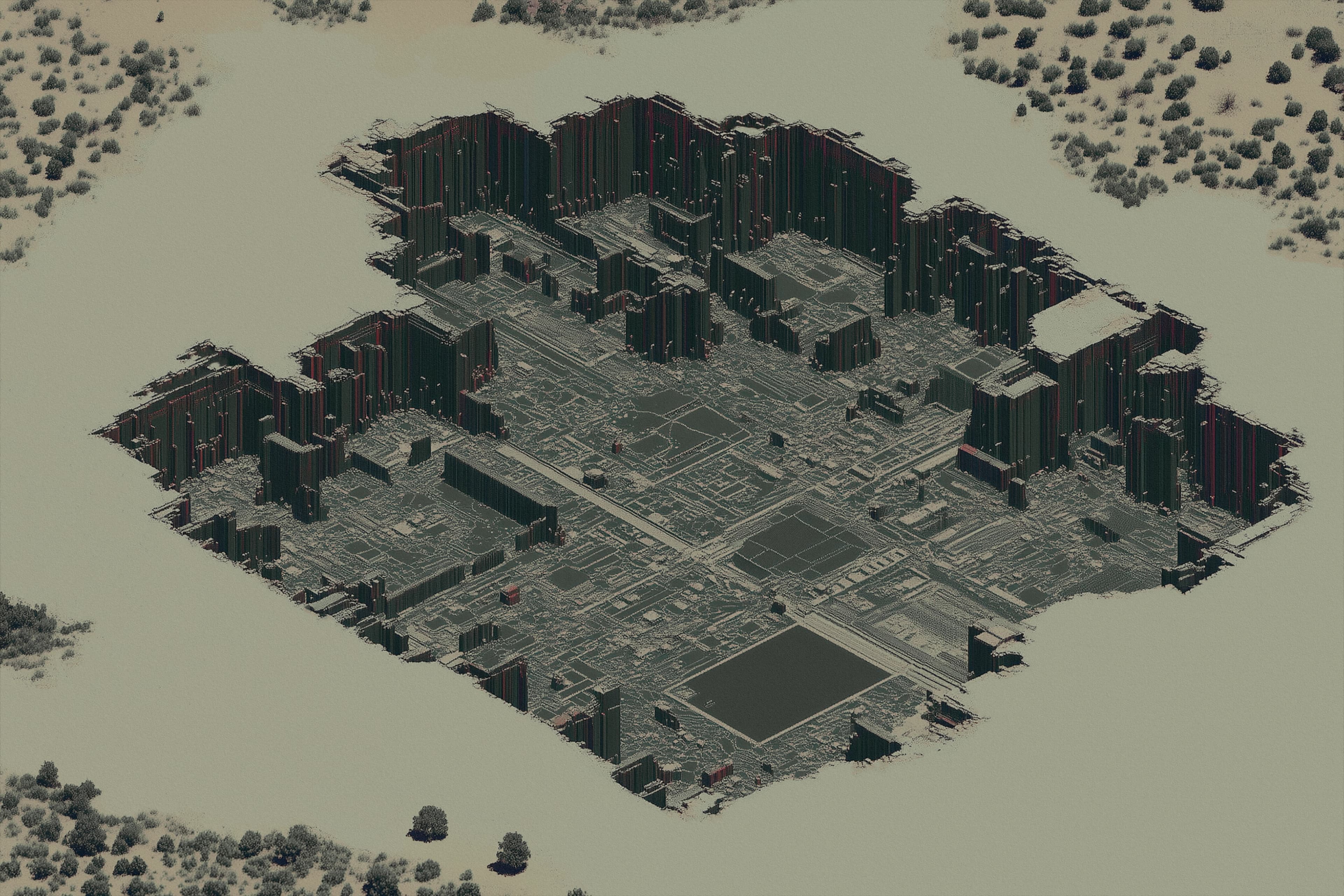

The diagram above illustrates three different models of innovation ownership:

- CLOSED: No leverage or choice in dealings - complete vertical ownership

- PROPRIETARY: No control of roadmap or features while incurring higher development and product costs

- OPEN: You drive and control the future through open foundations and collaborative development

Real-World Examples of Market Concentration

Just look at Wintel's OEM partners, like Compaq, which struggled to hit 5% operating margins in the late 90s, according to SEC filings. Dell, which revolutionized supply chains during the same period, typically enjoyed margins around 10%.

Compare this to Microsoft and Intel, which often tripled or quadrupled those figures in the same period, with Microsoft hitting 50.2% margins in 1999. Some have jokingly referred to this as "drug dealer margins." In 2001, Windows had >90% market share, and almost 25 years later, it still has >70% market share.

The Formation of "Swamps"

How do closed worlds form? One word: swamps. A swamp is a moat gone stagnant from incumbents who have forgotten how to innovate.

There are many ways to produce a swamp:

- Overcomplication: Protecting a product by adding unnecessary proprietary systems and layers of abstraction

- License Fees: Charging rents in the form of licensing costs

- Feature Bloat: Piling on features just enough to justify upgrades while staying disconnected from actual needs

- Bundling: Offering something "for free" as an inseparable part of a bundled service to lock out competition

However it happens, what started as innovation becomes just an extra tax on the product, erecting monopolies instead of creating real value. These companies become incentivized to preserve the status quo rather than changing.

The AI Concentration Risk

Today, many companies are forced into choosing closed systems because they don't know of, or can't imagine, an alternative. Industry leaders see the sector as a tight competition between a few established incumbents and a handful of well-funded startups. We're seeing consolidation in the market, accompanied by a huge increase in total market value.

We saw hints of this concentration effect with the previous computer class, the cloud. Jonathan Zittrain argues that the cloud has put accessibility at risk, leaving "new gatekeepers in place, with us and them prisoner to their limited business plans and to regulators who fear things that are new and disruptive."

Unlike hyperscalers before it, AI threatens to tip consolidation into full enclosure.

The Stakes: A Referendum on Society's Future

If AI eats everything, like software has eaten everything, this means that open versus closed is a referendum on the future shape of society as a whole. A handful of companies will own the means of intelligence production, and everyone else will purchase access at whatever price they set. As many have warned, this will represent a new form of social stratification.

It is clear to us that open is existential.

Part 3: An Open World - The Promise of Open Systems

The Infiltration Power of Open Source

Open source has a way of infiltrating crucial computing applications. The internet runs on it. The entire AI research stack uses open source frameworks. Even proprietary tech relies on it, with 90% of Fortune 500 companies using open source software. There wouldn't be macOS without BSD Unix, Azure without Linux, or Netflix without FFmpeg.

Historical Success of Open Standards

Open source and its hardware equivalent, open standards, have repeatedly catalyzed mass adoption by reducing friction and enabling interoperability:

- Ethernet: Robert Metcalfe says the openness of Ethernet allowed it to beat rival standards

- DRAM: Enabled the mass adoption of PCs with high-capacity, low-cost memory

- PCIe: Enabled high-speed interoperability of PC components

- Open Compute Project: Used by Meta and Microsoft among others, standardized rack and server design so components could be modular and vendor-agnostic

RISC-V: The Hardware Equivalent of Linux for AI

RISC-V aims to be for AI hardware what Linux is for software. It launched in 2010 at UC Berkeley as a free, open-standard alternative to proprietary architectures like Intel's x86 and Arm.

Key advantages of RISC-V:

- Open Nature: Allows deep customization, making it especially desirable for AI and edge computing applications

- Royalty-Free: No licensing costs or restrictions

- Growing Adoption: Companies from Google to Tenstorrent are adopting it for custom silicon

- Flexibility: Its ISA (Instruction Set Architecture) is modular, pairing a small base with optional extensions, so designers implement only what a workload needs
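The customization described above is visible in how RISC-V implementations are named: an ISA string such as `rv64imac` states the register width and then lists the extensions a chip implements. The parser below is a simplified sketch rather than a complete implementation of the naming rules. It assumes lowercase letters, underscore-separated multi-letter extensions, and only the handful of single-letter extensions in its table; the function name and return shape are hypothetical.

```python
import re

# A few single-letter standard extensions (descriptions paraphrased).
STANDARD_EXTENSIONS = {
    "i": "base integer instructions",
    "e": "reduced base integer set (16 registers, for embedded use)",
    "m": "integer multiplication and division",
    "a": "atomic instructions",
    "f": "single-precision floating point",
    "d": "double-precision floating point",
    "c": "compressed instructions",
    "v": "vector operations",
}


def parse_isa_string(isa: str) -> dict:
    """Split a RISC-V ISA string into register width and extensions.

    Simplification: multi-letter extensions must be separated by "_".
    """
    head, *named = isa.lower().split("_")
    match = re.fullmatch(r"rv(32|64|128)([a-z]+)", head)
    if match is None:
        raise ValueError(f"not a recognized RISC-V ISA string: {isa!r}")
    # "g" is shorthand for the general-purpose set; here it expands to
    # imafd (the specification also folds in Zicsr and Zifencei).
    letters = match.group(2).replace("g", "imafd")
    unknown = [ch for ch in letters if ch not in STANDARD_EXTENSIONS]
    if unknown:
        raise ValueError(f"extensions not in this sketch's table: {unknown}")
    return {
        "xlen": int(match.group(1)),
        "extensions": {ch: STANDARD_EXTENSIONS[ch] for ch in letters},
        "named_extensions": named,
    }


if __name__ == "__main__":
    for isa in ("rv32imc", "rv64gc", "rv64imafdv_zicsr"):
        parsed = parse_isa_string(isa)
        print(isa, "->", parsed["xlen"], list(parsed["extensions"]),
              parsed["named_extensions"])
```

A microcontroller vendor might ship only `rv32imc`, while an AI accelerator could add the vector extension or its own custom instructions, all without negotiating a license.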

The Global Talent Pool Advantage

Open systems also attract a global talent pool. Linux itself is the shining example of this, constructed by thousands of engineers, with significant contributions coming both from independent outsiders and employees of major players like Intel and Google.

This collaborative approach creates several benefits:

- Diverse Perspectives: Contributors from around the world bring different viewpoints and solutions

- Rapid Innovation: Multiple teams working on problems simultaneously accelerates development

- Quality Assurance: More eyes on the code tend to catch security flaws and bugs sooner

- Knowledge Sharing: Open development spreads expertise across the entire community

The Default State of Technology

We believe open is the default state – what remains when artificial boundaries fall away. The only question is how long those boundaries hold, and how much progress will be delayed in the meantime.

But we can't assume that we'll return to the historical trend of falling costs and broadening access. We're at a critical juncture. As companies build out their AI stack, they are making a choice today that will determine the future. Companies can invest in closed systems, further concentrating leverage in the hands of a few players, or they can retain agency by investing in open systems, which are affordable, transparent, and modifiable.

The AI Stack: Current Reality vs. Open Future

The Current State: Closed Today

Today, parts of the AI stack are open, parts are closed, and parts have yet to be decided. Let's examine the current state across the different layers:

Hardware Layer

Status: CLOSED

Most hardware today is a black box, literally. You're reliant on a company to fix, optimize, and, at times, even implement your workloads. This creates several problems:

- Vendor Lock-in: Organizations become dependent on specific hardware vendors

- Limited Customization: Unable to optimize hardware for specific use cases

- High Switching Costs: Moving between vendors requires significant investment

- Innovation Bottlenecks: Progress limited by vendor roadmaps and priorities

Low-Level Software Layer

Status: CLOSED

Most parallelization software is proprietary, causing unnecessary lock-in and massive switching costs:

- Proprietary APIs: Vendor-specific programming interfaces

- Limited Portability: Code written for one platform doesn't easily transfer

- Optimization Constraints: Unable to modify software for specific needs

- Dependency Risks: Reliance on vendor support and updates

Models Layer

Status: MIXED

Models present a complex landscape, but most leading ones are closed:

- Leading Models: GPT-4, Claude, and other state-of-the-art models are proprietary

- Open Models: Available but often with limited data, little support, and no guarantees of remaining open

- Training Data: Most closed models use proprietary training datasets

- Future Uncertainty: Open models may become closed as companies seek monetization

Applications Layer

Status: CLOSED

Even applications using open source models are typically built using cloud platform APIs:

- Data Pooling: Your data is being used to train next-generation models

- API Dependencies: Applications rely on cloud services for functionality

- Privacy Concerns: User interactions contribute to model improvement

- Control Loss: Limited ability to modify or customize application behavior

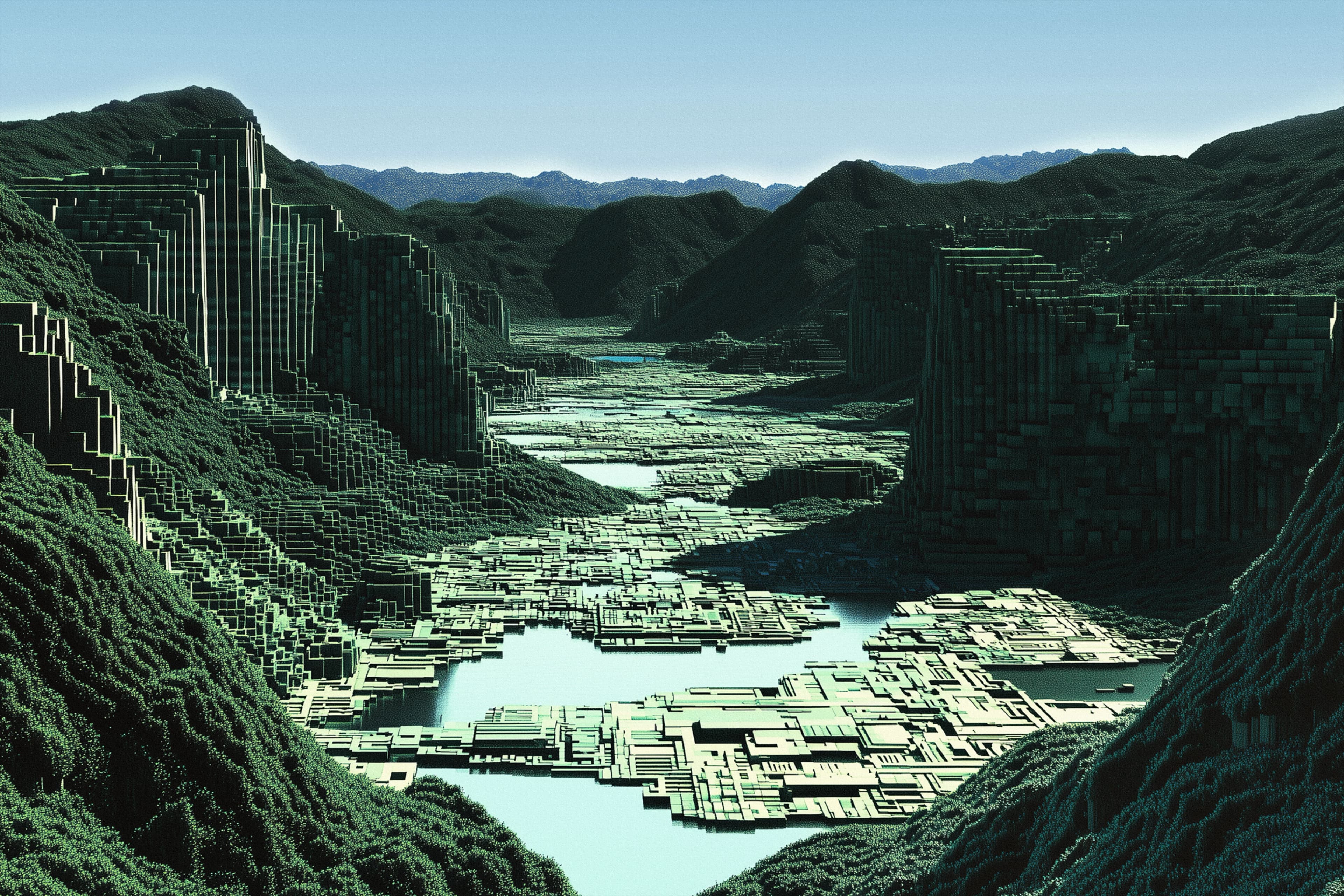

The Vision: Open Future

The open future represents a fundamental shift where all layers of the AI stack become open, collaborative, and user-controlled. This transformation would create:

Open Hardware

- RISC-V Adoption: Open instruction set architectures enabling custom silicon

- Modular Design: Interoperable components from multiple vendors

- Community Development: Collaborative hardware design and optimization

- Cost Reduction: Competition and standardization driving down prices

Open Software Stack

- Open Parallelization: Community-developed software for distributed computing

- Portable Code: Applications that run across different hardware platforms

- Transparent Optimization: Ability to modify and improve software performance

- Collaborative Development: Global community contributing to improvements
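What "portable code" could look like in practice is sketched below: application logic is written once against a small shared interface, and each hardware vendor supplies an implementation behind it. Everything here is hypothetical, including the `ComputeBackend` interface, both backend classes, and the `dense_layer` function. The backends are plain-Python stand-ins for real accelerators, not any existing library's API.

```python
from typing import Protocol, Sequence

Matrix = Sequence[Sequence[float]]


class ComputeBackend(Protocol):
    """Hypothetical shared interface that every vendor would implement."""
    name: str

    def matmul(self, a: Matrix, b: Matrix) -> list[list[float]]: ...


class RowColumnBackend:
    """Stand-in for vendor A: dot product of each row with each column."""
    name = "vendor-a"

    def matmul(self, a: Matrix, b: Matrix) -> list[list[float]]:
        columns = list(zip(*b))
        return [[sum(x * y for x, y in zip(row, col)) for col in columns]
                for row in a]


class AccumulatingBackend:
    """Stand-in for vendor B: same result, different loop order inside."""
    name = "vendor-b"

    def matmul(self, a: Matrix, b: Matrix) -> list[list[float]]:
        out = [[0.0] * len(b[0]) for _ in a]
        for i, row in enumerate(a):
            for k, a_ik in enumerate(row):
                for j, b_kj in enumerate(b[k]):
                    out[i][j] += a_ik * b_kj
        return out


def dense_layer(backend: ComputeBackend, inputs: Matrix,
                weights: Matrix) -> list[list[float]]:
    """Application code: depends only on the interface, not on a vendor."""
    return backend.matmul(inputs, weights)


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]
    w = [[5.0, 6.0], [7.0, 8.0]]
    for backend in (RowColumnBackend(), AccumulatingBackend()):
        print(backend.name, dense_layer(backend, x, w))
```

Swapping vendors then means changing which backend object is passed in, not rewriting `dense_layer`; with a proprietary API, the equivalent change usually reaches into the application code itself.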

Open Models

- Transparent Training: Open datasets and training methodologies

- Community Models: Collaboratively developed and maintained AI models

- Customization Freedom: Ability to fine-tune and modify models for specific needs

- Guaranteed Openness: Governance structures ensuring models remain open

Open Applications

- User Control: Applications that respect user privacy and data ownership

- Local Processing: Ability to run AI applications without cloud dependencies

- Customizable Interfaces: Applications that can be modified for specific use cases

- Data Sovereignty: Users maintain control over their data and its usage

The Domino Effect of Opening Hardware

Opening up AI hardware, with open standards like RISC-V, and its associated software would trigger a domino effect upstream. It would enable "a world where mainstream technology can be influenced, even revolutionized, out of left field."

This means a richer future with more experimentation and more breakthroughs we can barely imagine today, such as:

- Personalized Cancer Vaccines: AI-driven medical treatments tailored to individual patients

- Natural Disaster Prediction: Advanced modeling for early warning systems

- Abundant Energy: AI-optimized renewable energy systems and distribution

- Educational Democratization: Personalized learning systems accessible globally

- Scientific Discovery: AI assistants accelerating research across all disciplines

And this world gets here a lot faster outside of a swamp.

Conclusion: The Choice That Defines Our Future

The Silicon Valley Paradox

There's an old Silicon Valley adage: "If you aren't paying, you are the product." In AI, we've been paying steeply for the product, but we still are the product. We have collectively generated the information being used to train AI, and we're feeding it more every day.

This creates a fundamental paradox: we're both the customers and the raw material for AI systems, yet we have little control over how these systems develop or how they're used.

The Stakes: Who Owns Intelligence?

In a closed world, AI owns everything, and that AI is owned by a few. This concentration of power represents more than just market dominance – it's about who controls the means of intelligence production in the 21st century.

The implications are profound:

- Economic Control: A handful of companies setting prices for access to intelligence

- Innovation Bottlenecks: Progress limited by the priorities and capabilities of a few organizations

- Social Stratification: New forms of inequality based on access to AI capabilities

- Democratic Concerns: Concentration of power in private entities with limited accountability

The Open Alternative

Opening up hardware and software means a future where AI doesn't own you. Instead:

- Distributed Innovation: Thousands of organizations and individuals contributing to AI development

- Competitive Markets: Multiple providers driving down costs and improving quality

- User Agency: Individuals and organizations maintaining control over their AI systems

- Transparent Development: Open processes that can be audited and understood by the community

The Critical Juncture

We stand at a critical juncture in the history of computing. The decisions made today about AI infrastructure will echo for decades to come. Companies building out their AI stack are making choices that will determine whether we get:

A Closed Future:

- Concentrated power in the hands of a few tech giants

- High costs and limited access to AI capabilities

- Innovation controlled by corporate priorities

- Users as products rather than empowered participants

Or an Open Future:

- Democratized access to AI tools and capabilities

- Competitive innovation driving rapid progress

- User control and privacy protection

- AI as a tool for human flourishing rather than corporate control

The Path Forward

The writing is on the wall for AI. We are veering towards a closed world where a constellation of technology companies is left fighting over scraps. Competition, innovation, and sustainable business can't thrive in this low-oxygen environment.

But there is another path. By choosing open standards like RISC-V, supporting open source AI frameworks, and demanding transparency in AI development, we can ensure that the AI revolution follows the historical pattern of democratization rather than concentration.

A Call to Action

The choice is not just for technology companies – it's for everyone who will be affected by AI, which is to say, everyone. We must:

- Support Open Standards: Choose products and services built on open foundations

- Demand Transparency: Require visibility into how AI systems work and make decisions

- Invest in Open Development: Fund and contribute to open source AI projects

- Advocate for Open Policies: Support regulations that promote competition and openness

- Build Open Communities: Participate in collaborative development of AI technologies

The Default State

We believe open is the default state – what remains when artificial boundaries fall away. The only question is how long those boundaries hold, and how much progress will be delayed in the meantime.

The future of AI – and by extension, the future of human society in the age of artificial intelligence – depends on the choices we make today. We can choose a future where AI serves humanity broadly, or we can accept a future where humanity serves AI's corporate owners.

The choice is ours, but we must make it now.

This document is based on content from OpenFuture by Tenstorrent, exploring the critical importance of open systems in the age of artificial intelligence.